Designing Rubrics for Evaluation

Last week, I attended an Australasian Evaluation Society (AES) workshop on “Foundations of Rubric Design”. It was a thought-provoking session. Krystin Martens, the presenter, explained and challenged our understanding of rubric design, and shared practical tips for developing and using rubrics well in evaluation. Here are my key takeaways from the workshop:

Why do we use rubrics in evaluation?

A rubric is a tool, matrix or guide that sets out specific criteria and standards for judging different levels of performance. More and more evaluators now use rubrics to guide their judgements about program performance. Rubrics let evaluators transform evidence from one form into another, for example turning qualitative evidence into quantitative data. They also provide a transparent way to analyse and synthesise evidence into an overall evaluative judgement throughout the evaluation process.

Three systematic steps to create a rubric for evaluation

There are three logical steps to develop a rubric:

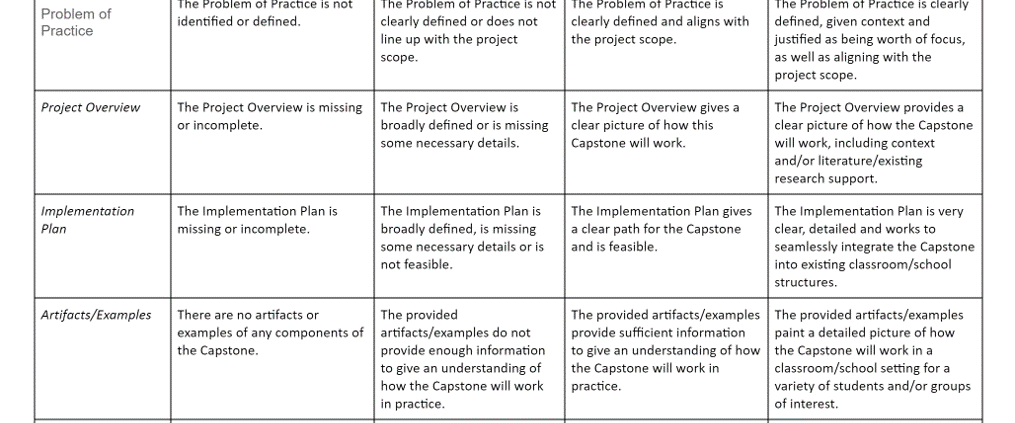

- Establish criteria: criteria are the dimensions or essential elements of quality for a given type of performance. For example, criteria for a good presentation might include content and creativity; coherence and organisation of the material; speaking skills; and participation/interaction with the audience.

- Construct standards: standards are the scaled levels of performance, that is, gradations of quality or ratings of performance. For example, a scale might run from poor through adequate to excellent, or from novice through apprentice and proficient to distinguished.

- Build a descriptor for each criterion and standard: a descriptor is a narrative description of what performance at each level of the standard looks like. For example, the descriptor for poor speaking skills might state that the presenters were often inaudible and/or hesitant, relied heavily on notes and went over the required time, among other features.
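The three steps can be pictured as filling in a matrix, with criteria as rows, standards as columns and descriptors in the cells. The minimal Python sketch below works under stated assumptions: the `Rubric` class and its `describe` and `score` methods are hypothetical names for illustration, and the coding of poor, adequate and excellent as 1, 2 and 3 is an assumed ordinal scale (one simple way to turn qualitative judgements into quantitative data). The ratings are made up. Only the poor speaking-skills descriptor comes from the example above, and empty cells stand for descriptors still to be written.

```python
from dataclasses import dataclass, field

# Hypothetical ordinal coding: position in the list + 1 (poor=1, adequate=2, excellent=3).
STANDARDS = ["poor", "adequate", "excellent"]


@dataclass
class Rubric:
    """Hypothetical rubric: criteria (rows) x standards (columns), descriptors in the cells."""
    criteria: list[str]
    standards: list[str]
    # (criterion, standard) -> narrative of what performance at that level looks like
    descriptors: dict[tuple[str, str], str] = field(default_factory=dict)

    def _check(self, criterion: str, standard: str) -> None:
        if criterion not in self.criteria:
            raise KeyError(f"unknown criterion: {criterion!r}")
        if standard not in self.standards:
            raise KeyError(f"unknown standard: {standard!r}")

    def describe(self, criterion: str, standard: str) -> str:
        self._check(criterion, standard)
        return self.descriptors.get((criterion, standard), "(descriptor not yet written)")

    def score(self, criterion: str, standard: str) -> int:
        # Turn a qualitative rating into an ordinal number (assumed coding).
        self._check(criterion, standard)
        return self.standards.index(standard) + 1


presentation = Rubric(
    criteria=[
        "content and creativity",
        "coherence and organisation",
        "speaking skills",
        "interaction with audience",
    ],
    standards=STANDARDS,
    descriptors={
        ("speaking skills", "poor"): (
            "Presenters were often inaudible and/or hesitant, relied heavily "
            "on notes, and went over the required time."
        ),
    },
)

# Made-up ratings for one presentation, for illustration only.
ratings = {
    "content and creativity": "adequate",
    "coherence and organisation": "excellent",
    "speaking skills": "poor",
    "interaction with audience": "adequate",
}
scores = {c: presentation.score(c, s) for c, s in ratings.items()}
print(scores)
print(presentation.describe("speaking skills", "poor"))
```

Keying descriptors by (criterion, standard) makes any unwritten cell of the matrix easy to spot before the rubric is put to use.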

Ensuring reliability of judgement and creating gold standards in evaluation

A calibration process is needed so that all evaluators assess program performance consistently and in line with the scoring rubric. Calibration ensures that different evaluators produce similar scores when assessing the same program performance. It is a critical step in creating gold standards for assessment and in increasing the reliability of the assessment data. For example, when we evaluate a multi-country development program and deploy more than one evaluator, we need to make sure that all evaluators agree on the rubric and understand the performance expectations it expresses, so that they can interpret and apply it consistently.
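One common way to check whether calibration has worked (not something prescribed in the workshop) is to have two evaluators rate the same items with the rubric and measure how often they agree. The sketch below assumes exactly two evaluators and uses simple percent agreement plus Cohen's kappa, a standard statistic that discounts the agreement expected by chance. The function names and the ratings are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a: list[str], rater_b: list[str]) -> float:
    """Share of items on which both evaluators chose the same standard."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and the same length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: for each level, P(A picks it) * P(B picks it), summed.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both evaluators used one identical level for every item
    return (observed - expected) / (1 - expected)


# Made-up ratings of six program components by two evaluators.
evaluator_a = ["adequate", "excellent", "poor", "adequate", "excellent", "adequate"]
evaluator_b = ["adequate", "excellent", "adequate", "adequate", "excellent", "poor"]
print(round(percent_agreement(evaluator_a, evaluator_b), 2))  # 0.67
print(round(cohens_kappa(evaluator_a, evaluator_b), 2))  # 0.45
```

Percent agreement alone can look high when most items fall into one level, which is why kappa is reported alongside it.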

If you have any experience using rubrics in evaluation, please share and tweet your experience and thoughts with us @ClearHorizonAU.

References

Tools for Assessment. Retrieved from: https://www.cmu.edu/teaching/assessment/examples/courselevel-bycollege/hss/tools/jeria.pdf

Rubrics: Tools for Making Learning Goals and Evaluation Criteria Explicit for Both Teachers and Learners. Retrieved from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1618692/

Rogers, Patricia. Rubrics. BetterEvaluation. Retrieved from: https://www.betterevaluation.org/en/evaluation-options/rubrics